In this tutorial we will understand how to setup a Pivotal Greenplum 6 database cluster with following set up on Linux 6.

Table of Contents

How to Install, Create and Configure Greenplum database 6.12 in Linux – Introduction

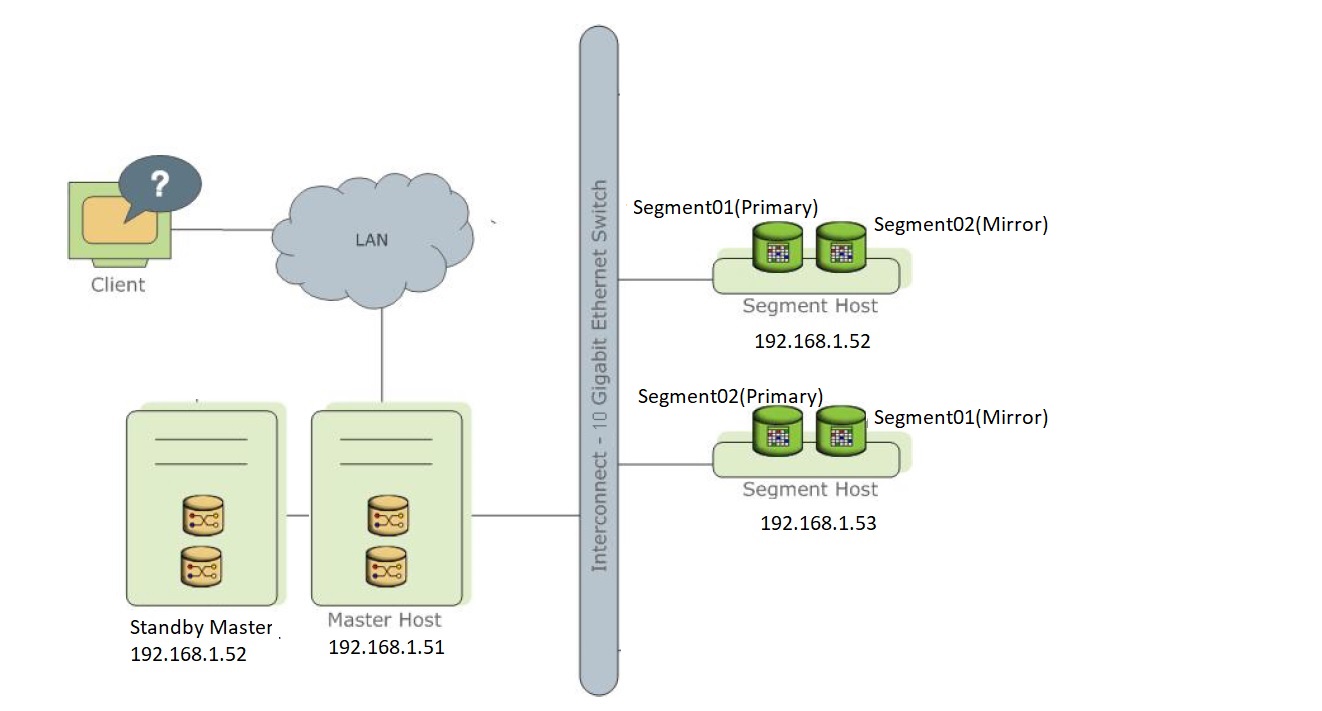

| IP Address | Hostname | Implementations-1 | Implementations-2 |

| 192.168.1.51 | m_node01 | master | |

| 192.168.1.52 | segnode01 | gpseg0(primary) | gpseg1(mirror), master standby |

| 192.168.1.53 | segnode02 | gpseg1(primary) | gpseg0(mirror) |

Introducing the terms defined:

Greenplum Database is a massively parallel processing (MPP) database server with an architecture specially designed to manage large-scale analytic data warehouses and business intelligence workloads.

MPP (also known as a shared nothing architecture) refers to systems with two or more processors that cooperate to carry out an operation, each processor with its own memory, operating system and disks.

Greenplum uses this high-performance system architecture to distribute the load of multi-terabyte data warehouses, and can use all of a system’s resources in parallel to process a query.

Greenplum Master

The Greenplum Database master is the entry to the Greenplum Database system, accepting client connections and SQL queries, and distributing work to the segment instances.

Greenplum Database end-users interact with Greenplum Database (through the master) as they would with a typical PostgreSQL database.

Master Mirroring/Standby Master

You can deploy a backup or mirror of the master instance on a separate host machine or on the same host machine. A backup master or standby master serves as a warm standby if the primary master becomes nonoperational.

Greenplum Segments

Greenplum Database segment instances are independent PostgreSQL databases that each store a portion of the data and perform the majority of query processing.

When a user connects to the database via the Greenplum master and issues a query, processes are created in each segment database to handle the work of that query.

Segment Mirroring

When Greenplum Database High Availability is enabled, there are two types of segments: primary and mirror.

Each primary segment has one corresponding mirror segment.

A primary segment receives requests from the master to make changes to the segment’s database and then replicates those changes to the corresponding mirror.

If a primary segment becomes unavailable, database queries fail over to the mirror segment.

Segment mirroring employs a physical file replication scheme—data file I/O at the primary is replicated to the secondary so that the mirror’s files are identical to the primary’s files.

In our example., we are going to have the below set up

| IP Address | Hostname | Implementations-1 | Implementations-2 |

| 192.168.1.51 | m_node01 | master | |

| 192.168.1.52 | segnode01 | gpseg0(primary) | gpseg1(mirror), master standby |

| 192.168.1.53 | segnode02 | gpseg1(primary) | gpseg0(mirror) |

- We use 192.168.1.51 as a master and 192.168.1.52 as a standby server.

- We use 192.168.1.52 as a segment 1 and 192.168.1.53 for its standby(replication)

- We use 192.168.1.53 as a segment 2 and 192.168.1.52 for its standby(replication)

We will divide the installation into three steps

- Pre-requisites installation or preparing the environment

- Greenplum software installation

- Configuring database setup

How to Install, Create and Configure Greenplum database in Linux : Pre-requisites

Perform the following tasks in order:

1. Make sure your host systems meet the requirements described in Platform Requirements.

The following is the configuration that I used on each of my machine.

| CPU | x86_64 |

| Memory | 2GB |

| Hard Disk | 20 GB |

2. Disable SELinux and firewall software.

If SELinux is not disabled, disable it by editing the /etc/selinux/config file.

SELINUX=disabled

|

1 2 3 4 5 6 7 8 9 10 11 12 |

[root@mnode ~]# cat /etc/selinux/config # This file controls the state of SELinux on the system. # SELINUX= can take one of these three values: # enforcing - SELinux security policy is enforced. # permissive - SELinux prints warnings instead of enforcing. # disabled - No SELinux policy is loaded. SELINUX=disabled # SELINUXTYPE= can take one of these two values: # targeted - Targeted processes are protected, # mls - Multi Level Security protection. SELINUXTYPE=targeted |

Ensure that SELinux is disabled (as a root user)

/sbin/chkconfig iptables off

/sbin/chkconfig –list iptables

|

1 2 3 |

[root@mnode ~]# /sbin/chkconfig iptables off [root@mnode ~]# /sbin/chkconfig --list iptables iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off |

3. Set the required operating system parameters.

Set the parameters in the /etc/sysctl.conf file and reload with sysctl -p:

# kernel.shmall = _PHYS_PAGES / 2 # See Shared Memory Pages

kernel.shmall = 197951838

# kernel.shmmax = kernel.shmall * PAGE_SIZE

kernel.shmmax = 810810728448

kernel.shmmni = 4096

vm.overcommit_memory = 2 # See Segment Host Memory

vm.overcommit_ratio = 95 # See Segment Host Memory

net.ipv4.ip_local_port_range = 10000 65535 # See Port Settings

kernel.sem = 500 2048000 200 4096

kernel.sysrq = 1

kernel.core_uses_pid = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.msgmni = 2048

net.ipv4.tcp_syncookies = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_max_syn_backlog = 4096

net.ipv4.conf.all.arp_filter = 1

net.core.netdev_max_backlog = 10000

net.core.rmem_max = 2097152

net.core.wmem_max = 2097152

vm.swappiness = 10

vm.zone_reclaim_mode = 0

vm.dirty_expire_centisecs = 500

vm.dirty_writeback_centisecs = 100

vm.dirty_background_ratio = 0 # See System Memory

vm.dirty_ratio = 0

vm.dirty_background_bytes = 1610612736

vm.dirty_bytes = 4294967296

fs.file-max = 65536

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

[root@mnode ~]# cat /etc/sysctl.conf # Kernel sysctl configuration file for Red Hat Linux # # For binary values, 0 is disabled, 1 is enabled. See sysctl(8) and # sysctl.conf(5) for more details. # Controls IP packet forwarding net.ipv4.ip_forward = 0 .. .. .. vm.dirty_background_bytes = 1610612736 vm.dirty_bytes = 4294967296 fs.file-max = 65536 |

/sbin/sysctl -p

|

1 |

[root@mnode ~]# /sbin/sysctl -p |

4. Set the security limits in /etc/security/limits.conf

* soft nofile 524288

* hard nofile 524288

* soft nproc 131072

* hard nproc 131072

|

1 2 3 4 5 6 7 8 9 10 11 |

[gpadmin@mnode ~]$ cat /etc/security/limits.conf .. .. * soft nofile 524288 * hard nofile 524288 * soft nproc 131072 * hard nproc 131072 # End of file |

4. Synchronize system clocks.

Ensure that the time sync is there in all the machines. For more details click here

5. Create the gpadmin account.

groupadd gpadmin

useradd gpadmin -r -m -g gpadmin

passwd gpadmin

|

1 2 3 4 5 6 7 8 9 10 |

[root@mnode ~]# groupadd gpadmin [root@mnode ~]# useradd gpadmin -r -m -g gpadmin [root@mnode ~]# passwd gpadmin Changing password for user gpadmin. New password: BAD PASSWORD: it is based on a dictionary word BAD PASSWORD: is too simple Retype new password: passwd: all authentication tokens updated successfully. [root@mnode ~]# |

Download Greenplum database software

wget https://github.com/greenplum-db/gpdb/releases/download/6.12.1/greenplum-db-6.12.1-rhel6-x86_64.rpm

chmod 755 greenplum-db-6.12.1-rhel6-x86_64.rpm

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

[root@dbapath ~]# [root@dbapath ~]# wget https://github.com/greenplum-db/gpdb/releases/download/6.12.1/greenplum-db-6.12.1-rhel6-x86_64.rpm --2020-12-23 21:53:02-- https://github.com/greenplum-db/gpdb/releases/download/6.12.1/greenplum-db-6.12.1-rhel6-x86_64.rpm Resolving github.com... 13.234.176.102 Connecting to github.com|13.234.176.102|:443... connected. HTTP request sent, awaiting response... 302 Found Location: https://github-production-release-asset-2e65be.s3.amazonaws.com/44781140/bbcf0280-2d7d-11eb-8c7c-e1ba62bfabe6?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20201223%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201223T162504Z&X-Amz-Expires=300&X-Amz-Signature=6ff2218d38b87dbb2d09e5eda42380866531458041f4f8f7dc93bffe8e94759a&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=44781140&response-content-disposition=attachment%3B%20filename%3Dgreenplum-db-6.12.1-rhel6-x86_64.rpm&response-content-type=application%2Foctet-stream [following] --2020-12-23 21:53:28-- https://github-production-release-asset-2e65be.s3.amazonaws.com/44781140/bbcf0280-2d7d-11eb-8c7c-e1ba62bfabe6?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20201223%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201223T162504Z&X-Amz-Expires=300&X-Amz-Signature=6ff2218d38b87dbb2d09e5eda42380866531458041f4f8f7dc93bffe8e94759a&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=44781140&response-content-disposition=attachment%3B%20filename%3Dgreenplum-db-6.12.1-rhel6-x86_64.rpm&response-content-type=application%2Foctet-stream Resolving github-production-release-asset-2e65be.s3.amazonaws.com... 52.216.89.244 Connecting to github-production-release-asset-2e65be.s3.amazonaws.com|52.216.89.244|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 70272016 (67M) [application/octet-stream] Saving to: “greenplum-db-6.12.1-rhel6-x86_64.rpm” 100%[=============================================================================================>] 70,272,016 1.21M/s in 53s 2020-12-23 21:54:47 (1.27 MB/s) - “greenplum-db-6.12.1-rhel6-x86_64.rpm” saved [70272016/70272016] [root@mnode ~]# chmod 755 greenplum-db-6.12.1-rhel6-x86_64.rpm |

Install the rpm

rpm -ivh greenplum-db-6.12.1-rhel6-x86_64.rpm

|

1 2 3 4 5 6 |

[root@mnode ~]# rpm -ivh greenplum-db-6.12.1-rhel6-x86_64.rpm error: Failed dependencies: krb5-devel is needed by greenplum-db-6-6.12.1-1.el6.x86_64 libyaml is needed by greenplum-db-6-6.12.1-1.el6.x86_64 libevent2 is needed by greenplum-db-6-6.12.1-1.el6.x86_64 [root@mnode ~]# |

In my case, I got some failed dependencies, I installed them one by one.

yum install -y krb5-devel

yum install -y libyaml

yum install -y libevent2

Then I installed Greenplum software

[root@mnode ~]#

[root@mnode ~]# rpm -ivh greenplum-db-6.12.1-rhel6-x86_64.rpm

Preparing… ########################################### [100%]

1:greenplum-db-6 ########################################### [100%]

[root@mnode ~]#

Greenplum database software – Post Installation steps

- Stop the virtual machine and clone it to two more machines.

- Set IP addresses as mentioned above

Now your setup should look like

| IP Address | Hostname |

| 192.168.1.51 | m_node01 |

| 192.168.1.52 | segnode01 |

| 192.168.1.53 | segnode02 |

Edit /etc/hosts file by adding below three entries and ensure it is copied in all three machines.

192.168.1.51 mnode mnode

192.168.1.52 segnode01 segnode01

192.168.1.53 segnode02 segnode02

|

1 2 3 4 5 6 7 |

[gpadmin@mnode ~]$ cat /etc/hosts 192.168.1.51 mnode mnode 192.168.1.52 segnode01 segnode01 192.168.1.53 segnode02 segnode02 #127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 [gpadmin@mnode ~]$ |

Setup passwordless connectivity between three machines

su gpadmin

ssh-keygen -t rsa -b 4096

Generating public/private rsa key pair.

Enter file in which to save the key (/home/gpadmin/.ssh/id_rsa):

Created directory ‘/home/gpadmin/.ssh’.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

[gpadmin@mnode ~]$ ssh-keygen -t rsa Generating public/private rsa key pair. Enter file in which to save the key (/home/gpadmin/.ssh/id_rsa): Created directory '/home/gpadmin/.ssh'. Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/gpadmin/.ssh/id_rsa. Your public key has been saved in /home/gpadmin/.ssh/id_rsa.pub. The key fingerprint is: d1:2b:05:ae:d2:85:70:be:e1:49:17:f4:45:c8:1e:79 gpadmin@mnode The key's randomart image is: +--[ RSA 2048]----+ | . ..+. +o | | + o ==.E | | = =.+o | | + B o.. | | . * S . | | . . | | | | | | | +-----------------+ [gpadmin@mnode ~]$ |

Source the path file in the Greenplum Database installation directory.

echo “source /usr/local/greenplum-db/greenplum_path.sh” >> .bashrc

echo “export MASTER_DATA_DIRECTORY=/data/master/gpseg-1” >> .bashrc

source .bashrc

|

1 2 3 |

[gpadmin@mnode ~]$ echo "source /usr/local/greenplum-db/greenplum_path.sh" >> .bashrc [gpadmin@mnode ~]$ echo "export MASTER_DATA_DIRECTORY=/data/master/gpseg-1" >> .bashrc [gpadmin@mnode ~]$ source .bashrc |

Use the ssh-copy-id command to add the gpadmin user’s public key to the authorized_hosts SSH file on every other host in the cluster.

ssh-copy-id segnode01

ssh-copy-id segnode02

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

[gpadmin@mnode ~]$ ssh-copy-id segnode01 The authenticity of host 'segnode01 (192.168.1.52)' can't be established. RSA key fingerprint is a8:7e:3b:c8:e6:00:22:62:25:0c:66:55:44:17:d3:5f. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added 'segnode01,192.168.1.52' (RSA) to the list of known hosts. gpadmin@segnode01's password: Now try logging into the machine, with "ssh 'segnode01'", and check in: .ssh/authorized_keys to make sure we haven't added extra keys that you weren't expecting. [gpadmin@mnode ~]$ [gpadmin@mnode ~]$ ssh segnode01 [gpadmin@segnode01 ~]$ |

Repeat the steps in all machines so that you have passwordless connectivity beween all the three machines.

In the gpadmin home directory, create a file named hostfile_exkeys that has the machine

configured host names and host addresses (interface names) for each host in your Greenplum system

[gpadmin@mnode ~]$ cat hostfile_exkeys

mnode

segnode01

segnode02

Run the gpssh-exkeys utility with your hostfile_exkeys file to enable n-n passwordless SSH for the gpadmin user.

gpssh-exkeys -f hostfile_exkeys

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

[gpadmin@mnode ~]$ gpssh-exkeys -f hostfile_exkeys [STEP 1 of 5] create local ID and authorize on local host ... /home/gpadmin/.ssh/id_rsa file exists ... key generation skipped [STEP 2 of 5] keyscan all hosts and update known_hosts file [STEP 3 of 5] retrieving credentials from remote hosts ... send to segnode01 ... send to segnode02 [STEP 4 of 5] determine common authentication file content [STEP 5 of 5] copy authentication files to all remote hosts ... finished key exchange with segnode01 ... finished key exchange with segnode02 [INFO] completed successfully [gpadmin@mnode ~]$ |

Confirming Your Installation in all the three machines

gpssh -f hostfile_exkeys -e ‘ls -l /usr/local/greenplum-db-<version>’

|

1 2 3 4 5 6 7 8 9 10 |

[gpadmin@mnode ~]$ [gpadmin@mnode ~]$ gpssh -f hostfile_exkeys -e 'ls -l /usr/local/greenplum-db' [segnode02] ls -l /usr/local/greenplum-db [segnode02] lrwxrwxrwx 1 root root 30 Dec 27 02:03 /usr/local/greenplum-db -> /usr/local/greenplum-db-6.12.1 [segnode01] ls -l /usr/local/greenplum-db [segnode01] lrwxrwxrwx 1 root root 30 Dec 27 02:03 /usr/local/greenplum-db -> /usr/local/greenplum-db-6.12.1 [ mnode] ls -l /usr/local/greenplum-db [ mnode] lrwxrwxrwx 1 root root 30 Dec 27 02:03 /usr/local/greenplum-db -> /usr/local/greenplum-db-6.12.1 [gpadmin@mnode ~]$ [gpadmin@mnode ~]$ |

Creating data directories for master and segment nodes(as a root)

Creating master directory in master node and segnode01(for master standby)

(as root)

chown gpadmin:gpadmin /data

mkdir -p /data/master

chown -R gpadmin:gpadmin /data

(as gpadmin user)

source /usr/local/greenplum-db/greenplum_path.sh

gpssh -h segnode01 -e ‘mkdir -p /data/master’

gpssh -h segnode01 -e ‘chown gpadmin:gpadmin /data/master’

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

[root@mnode ~]# chown gpadmin:gpadmin /data [root@mnode ~]# mkdir -p /data/master [root@mnode ~]# chown -R gpadmin:gpadmin /data [root@mnode ~]# [root@mnode ~]# [root@mnode ~]# exit logout [gpadmin@mnode ~]$ source /usr/local/greenplum-db/greenplum_path.sh [gpadmin@mnode ~]$ gpssh -h segnode01 -e 'mkdir -p /data/master' [segnode01] mkdir -p /data/master [gpadmin@mnode ~]$ gpssh -h segnode01 -e 'chown gpadmin:gpadmin /data/master' [segnode01] chown gpadmin:gpadmin /data/master [gpadmin@mnode ~]$ |

Creating Data Storage Areas on Segment Hosts

create a file hostfile_gpssh_segonly with all the segments nodes list

[gpadmin@mnode ~]$ cat hostfile_gpssh_segonly

segnode01

segnode02

[gpadmin@mnode ~]$

Using gpssh, create the primary and mirror data directory locations on all segment hosts at once using the hostfile_gpssh_segonly file you just created.

source /usr/local/greenplum-db/greenplum_path.sh

gpssh -f hostfile_gpssh_segonly -e ‘mkdir -p /data/primary’

gpssh -f hostfile_gpssh_segonly -e ‘mkdir -p /data/mirror’

gpssh -f hostfile_gpssh_segonly -e ‘chown -R gpadmin /data/*’

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

[gpadmin@mnode ~]$ source /usr/local/greenplum-db/greenplum_path.sh [gpadmin@mnode ~]$ [gpadmin@mnode ~]$ gpssh -f hostfile_gpssh_segonly -e 'mkdir -p /data/primary' [segnode01] mkdir -p /data/primary [segnode02] mkdir -p /data/primary [gpadmin@mnode ~]$ [gpadmin@mnode ~]$ gpssh -f hostfile_gpssh_segonly -e 'mkdir -p /data/mirror' [segnode02] mkdir -p /data/mirror [segnode01] mkdir -p /data/mirror [gpadmin@mnode ~]$ [gpadmin@mnode ~]$ gpssh -f hostfile_gpssh_segonly -e 'chown -R gpadmin /data/*' [segnode02] chown -R gpadmin /data/* [segnode01] chown -R gpadmin /data/* [gpadmin@mnode ~]$ [gpadmin@mnode ~]$ |

Initializing a Greenplum Database System

Creating the Greenplum Database Configuration File. Create gpinitsystem_config file with below configurations.

ARRAY_NAME=”Greenplum”

MACHINE_LIST_FILE=./hostfile

SEG_PREFIX=gpseg

PORT_BASE=50000

declare -a DATA_DIRECTORY=(/data/primary)

MASTER_HOSTNAME=mnode

MASTER_DIRECTORY=/data/master

MASTER_PORT=5432

TRUSTED_SHELL=ssh

CHECK_POINT_SEGMENTS=8

ENCODING=UNICODE

MACHINE_LIST_FILE=/home/gpadmin/hostfile_gpssh_segonly

MIRROR_PORT_BASE=7000

declare -a MIRROR_DATA_DIRECTORY=(/data/mirror)

Run the initialization utility

gpinitsystem -c ~/gpinitsystem_config -s segnode01

- Prompt yes(Y) when requested.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 |

[gpadmin@mnode ~]$ gpinitsystem -c ~/gpinitsystem_config -s segnode01 20201227:02:54:49:007449 gpinitsystem:mnode:gpadmin- gpinitsystem:mnode:gpadmin-[INFO]:-Checking new segment hosts, Completed gpinitsystem:mnode:gpadmin-[INFO]:-Greenplum Database Creation Parameters gpinitsystem:mnode:gpadmin-[INFO]:--------------------------------------- gpinitsystem:mnode:gpadmin-[INFO]:-Master Configuration gpinitsystem:mnode:gpadmin-[INFO]:--------------------------------------- gpinitsystem:mnode:gpadmin-[INFO]:-Master instance name = Greenplum gpinitsystem:mnode:gpadmin-[INFO]:-Master hostname = mnode gpinitsystem:mnode:gpadmin-[INFO]:-Master port = 5432 gpinitsystem:mnode:gpadmin-[INFO]:-Master instance dir = /data/master/gpseg-1 gpinitsystem:mnode:gpadmin-[INFO]:-Master LOCALE = en_US.utf8 gpinitsystem:mnode:gpadmin-[INFO]:-Greenplum segment prefix = gpseg gpinitsystem:mnode:gpadmin-[INFO]:-Master Database = gpinitsystem:mnode:gpadmin-[INFO]:-Master connections = 250 gpinitsystem:mnode:gpadmin-[INFO]:-Master buffers = 128000kB gpinitsystem:mnode:gpadmin-[INFO]:-Segment connections = 750 gpinitsystem:mnode:gpadmin-[INFO]:-Segment buffers = 128000kB gpinitsystem:mnode:gpadmin-[INFO]:-Checkpoint segments = 8 gpinitsystem:mnode:gpadmin-[INFO]:-Encoding = UNICODE gpinitsystem:mnode:gpadmin-[INFO]:-Postgres param file = Off [INFO]:-Checking configuration parameters, please wait... .. .. gpinitsystem:mnode:gpadmin-[INFO]:---------------------------------------- gpinitsystem:mnode:gpadmin-[INFO]:-Greenplum Primary Segment Configuration gpinitsystem:mnode:gpadmin-[INFO]:---------------------------------------- gpinitsystem:mnode:gpadmin-[INFO]:-segnode01 50000 segnode01 /data/primary/gpseg0 2 gpinitsystem:mnode:gpadmin-[INFO]:-segnode02 50000 segnode02 /data/primary/gpseg1 3 gpinitsystem:mnode:gpadmin-[INFO]:--------------------------------------- gpinitsystem:mnode:gpadmin-[INFO]:-Greenplum Mirror Segment Configuration gpinitsystem:mnode:gpadmin-[INFO]:--------------------------------------- gpinitsystem:mnode:gpadmin-[INFO]:-segnode02 7000 segnode02 /data/mirror/gpseg0 4 gpinitsystem:mnode:gpadmin-[INFO]:-segnode01 7000 segnode01 /data/mirror/gpseg1 5 .. .. Continue with Greenplum creation Yy|Nn (default=N): > Y .. .. gpstart:mnode:gpadmin-[INFO]:-Successfully started 2 of 2 segment instances 20201227:02:55:47:007449 gpinitsystem:mnode:gpadmin- [INFO]:------------------------------------------------------- [gpadmin@mnode ~]$ |

Create a database

psql -c “create database gpadmin” template1

psql -c “alter user gpadmin password ‘gpadmin'”

echo “host all all 0.0.0.0/0 md5” >> /data/master/gpseg-1/pg_hba.conf

gpstop -u

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

[gpadmin@mnode data]$ psql -c "create database gpadmin" template1 CREATE DATABASE [gpadmin@mnode data]$ psql -c "alter user gpadmin password 'gpadmin'" ALTER ROLE [gpadmin@mnode data]$ echo "host all all 0.0.0.0/0 md5" >> /data/master/gpseg-1/pg_hba.conf [gpadmin@mnode data]$ gpstop -u 20201227:02:58:57:010447 gpstop:mnode:gpadmin-[INFO]:-Starting gpstop with args: -u 20201227:02:58:57:010447 gpstop:mnode:gpadmin-[INFO]:-Gathering information and validating the environment... 20201227:02:58:57:010447 gpstop:mnode:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information 20201227:02:58:57:010447 gpstop:mnode:gpadmin-[INFO]:-Obtaining Segment details from master... 20201227:02:58:57:010447 gpstop:mnode:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 6.12.1 build commit:7ec4678f29dd922d7d44501f5fc344b5d0d4d49f Open Source' 20201227:02:58:57:010447 gpstop:mnode:gpadmin-[INFO]:-Signalling all postmaster processes to reload [gpadmin@mnode data]$ |

Check the setup with the following comamnds

|

1 2 3 4 5 6 7 8 9 10 |

gpadmin=# select dbid, port, hostname, address, datadir from gp_segment_configuration; dbid | port | hostname | address | datadir ------+-------+-----------+-----------+---------------------- 1 | 5432 | mnode | mnode | /data/master/gpseg-1 2 | 50000 | segnode01 | segnode01 | /data/primary/gpseg0 4 | 7000 | segnode02 | segnode02 | /data/mirror/gpseg0 3 | 50000 | segnode02 | segnode02 | /data/primary/gpseg1 5 | 7000 | segnode01 | segnode01 | /data/mirror/gpseg1 6 | 5432 | segnode01 | segnode01 | /data/master/gpseg-1 (6 rows) |

to see the mirror segments in the system and their status:

|

1 |

gpstate -m |

|

1 2 3 4 5 6 7 8 9 10 |

.... [INFO]:-Obtaining Segment details from master... [INFO]:-------------------------------------------------------------- [INFO]:--Current GPDB mirror list and status [INFO]:--Type = Spread [INFO]:-------------------------------------------------------------- [INFO]:- Mirror Datadir Port Status Data Status [INFO]:- segnode02 /data/mirror/gpseg0 7000 Passive Synchronized [INFO]:- segnode01 /data/mirror/gpseg1 7000 Passive Synchronized [INFO]:-------------------------------------------------------------- |

To see the primary to mirror segment mappings:

|

1 |

gpstate -c |

[INFO]:-Obtaining Segment details from master…

[INFO]:————————————————————–

[INFO]:–Current GPDB mirror list and status

[INFO]:–Type = Spread

[INFO]:————————————————————–

[INFO]:- Status Data State Primary Datadir Port Mirror Datadir Port

[INFO]:- Primary Active, Mirror Available Synchronized segnode01 /data/primary/gpseg0 50000 segnode02 /data/mirror/gpseg0 7000

[INFO]:- Primary Active, Mirror Available Synchronized segnode02 /data/primary/gpseg1 50000 segnode01 /data/mirror/gpseg1 7000

[INFO]:————————————————————–

To see the status of the standby master mirror:

|

1 |

gpstate -f |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

[INFO]:-Obtaining Segment details from master... [INFO]:-Standby master details [INFO]:----------------------- [INFO]:- Standby address = segnode01 [INFO]:- Standby data directory = /data/master/gpseg-1 [INFO]:- Standby port = 5432 [INFO]:- Standby PID = 2291 [INFO]:- Standby status = Standby host passive [INFO]:-------------------------------------------------------------- [INFO]:--pg_stat_replication [INFO]:-------------------------------------------------------------- [INFO]:--WAL Sender State: streaming [INFO]:--Sync state: sync [INFO]:--Sent Location: 0/C004F50 [INFO]:--Flush Location: 0/C004F50 [INFO]:--Replay Location: 0/C004F50 [INFO]:-------------------------------------------------------------- |

Thank you for giving your valuable time to read the above information. I hope the content served your purpose in reaching out the blog.

Suggestions for improvement of the blog are highly appreciable. Please contact us for any information/suggestion/feedback.

If you want to be updated with all our articles

please follow us on Facebook | Twitter

Please subscribe to our newsletter.

Thank you for putting this up!